Shared Brain: Achieving Zero-Wait States with Optimistic UI

Table of Contents

- The Interface: Decoupling Rules

- The Logic Mirror Handshake

- Reconciliation: When Friday becomes Saturday

- About the Author

The Interface: Decoupling Rules

The core of this strategy is giving your business logic a universal socket to plug into.

Imagine if every time you bought a new hair dryer, you had to call an electrician to solder the wires directly into your home’s electrical grid. That’s tight coupling. If you want to move the hair dryer or upgrade it, you have to rip out the drywall every time.

In code, soldering your business logic directly to your infrastructure creates the same mess. Instead, we use an interface, a universal socket, that defines the shape of the data we need. The logic shouldn’t care if it’s plugged into a real production database or a local frontend cache.

What is a Shared Brain?

A shared brain is not a microservice. If you put this logic in a microservice, the frontend still has to call it over a network, introducing the exact latency and uncertain loading states we’re trying to avoid.

Architecturally, this pattern is known as Universal (or Isomorphic) Business Logic, or in Domain-Driven Design, a Shared Kernel.

Practically, it is a package that both your frontend and backend import and run locally. It serves as a single source of truth for the rules, living in its own library. In a standard TypeScript monorepo, it looks like this:

- packages/brain: Pure business rules and math. Side-effect free. No database calls, no browser APIs.

- apps/client: Imports the shared brain and plugs it into local UI state for a high-fidelity frontend.

- apps/server: Imports the same shared brain and plugs it into the database for the final source of truth.

    // The "socket": the only thing the brain knows about its data source.
    export interface DataProvider {
      checkStock(id: string): Promise<number>;
    }

    export class DeliveryBrain {
      constructor(private dataSource: DataProvider) {}

      async calculate(itemId: string): Promise<string> {
        const stock = await this.dataSource.checkStock(itemId);
        // Logic: If in stock, it's Friday; otherwise, it's Saturday.
        return stock > 0 ? "Friday" : "Saturday";
      }
    }
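To make the socket idea concrete, here is a minimal sketch of two providers plugged into the same brain. The names `cacheProvider` and `databaseProvider` and the `sku-1` data are hypothetical, chosen only for illustration; the interface and class are repeated from the listing so the sketch runs on its own.

```typescript
interface DataProvider {
  checkStock(id: string): Promise<number>;
}

class DeliveryBrain {
  private dataSource: DataProvider;
  constructor(dataSource: DataProvider) {
    this.dataSource = dataSource;
  }

  async calculate(itemId: string): Promise<string> {
    const stock = await this.dataSource.checkStock(itemId);
    return stock > 0 ? "Friday" : "Saturday";
  }
}

// Hypothetical client-side provider: answers from local state, no network.
function cacheProvider(cache: Record<string, number>): DataProvider {
  return { checkStock: async (id) => cache[id] ?? 0 };
}

// Hypothetical server-side provider: a plain object stands in for the database.
// A real version would await a query here instead.
function databaseProvider(rows: Record<string, number>): DataProvider {
  return { checkStock: async (id) => rows[id] ?? 0 };
}

// Same rules, different sockets. The client cache is stale in this example.
const clientBrain = new DeliveryBrain(cacheProvider({ "sku-1": 3 }));
const serverBrain = new DeliveryBrain(databaseProvider({ "sku-1": 0 }));
```

Because `DeliveryBrain` only sees the `DataProvider` contract, swapping the cache for a database changes where the numbers come from, not how the rule is evaluated.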

The Logic Mirror Handshake

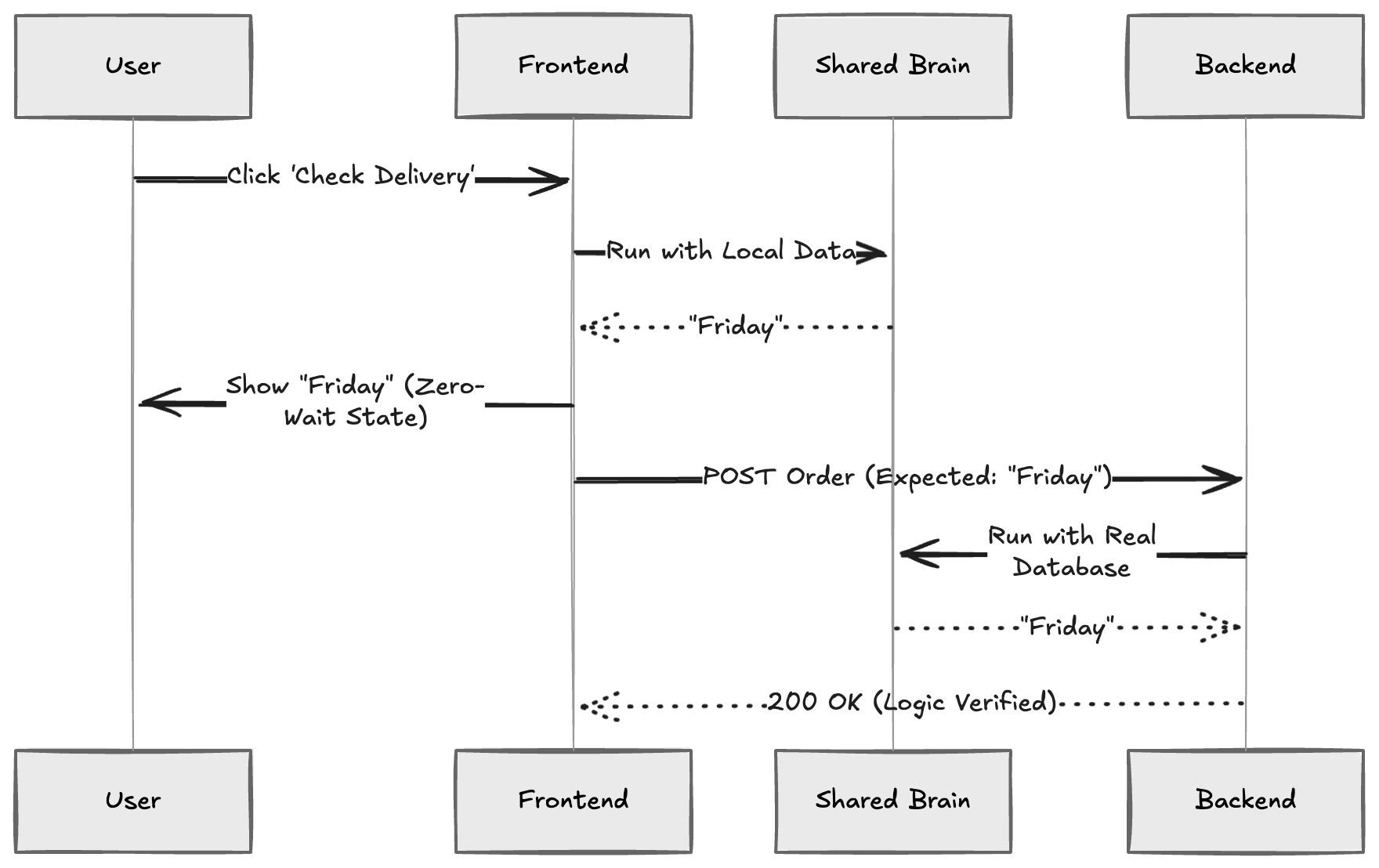

Because the frontend and backend share identical code, they become mirrors of each other: given the same inputs, both compute the same answer. The frontend can therefore show a result without waiting for the network, which creates a state of perceived zero latency.

When a user clicks a button, the frontend immediately runs the shared brain against local data. It displays the result instantly and then sends that “expected result” to the backend for verification.
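The handshake can be sketched roughly as follows. This is an illustration under assumptions, not a prescribed protocol: `deliveryDay` is a synchronous stand-in for the shared rule, and `VerifyRequest`, `VerifyResponse`, `verifyOnServer`, and `handleClick` are hypothetical names. The `send` parameter stands in for whatever transport the app uses.

```typescript
type DeliveryDay = "Friday" | "Saturday";

// Stand-in for the shared rule in packages/brain; both sides call this same function.
function deliveryDay(stock: number): DeliveryDay {
  return stock > 0 ? "Friday" : "Saturday";
}

// Hypothetical message shapes for the verification round trip.
interface VerifyRequest { itemId: string; expected: DeliveryDay }
interface VerifyResponse { actual: DeliveryDay; matches: boolean }

// Server side: re-run the rule against authoritative stock and compare.
function verifyOnServer(
  req: VerifyRequest,
  dbStock: Record<string, number>,
): VerifyResponse {
  const actual = deliveryDay(dbStock[req.itemId] ?? 0);
  return { actual, matches: actual === req.expected };
}

// Client side: render the local answer first, then ask the server to confirm it.
async function handleClick(
  itemId: string,
  cachedStock: Record<string, number>,
  render: (day: DeliveryDay) => void,
  send: (req: VerifyRequest) => Promise<VerifyResponse>, // e.g. an HTTP call in a real app
): Promise<VerifyResponse> {
  const expected = deliveryDay(cachedStock[itemId] ?? 0);
  render(expected); // shown immediately, no network wait
  return send({ itemId, expected });
}
```

The important ordering is that `render` runs before `send` is awaited, so the user sees a value while the verification is still in flight.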

Reconciliation: When Friday becomes Saturday

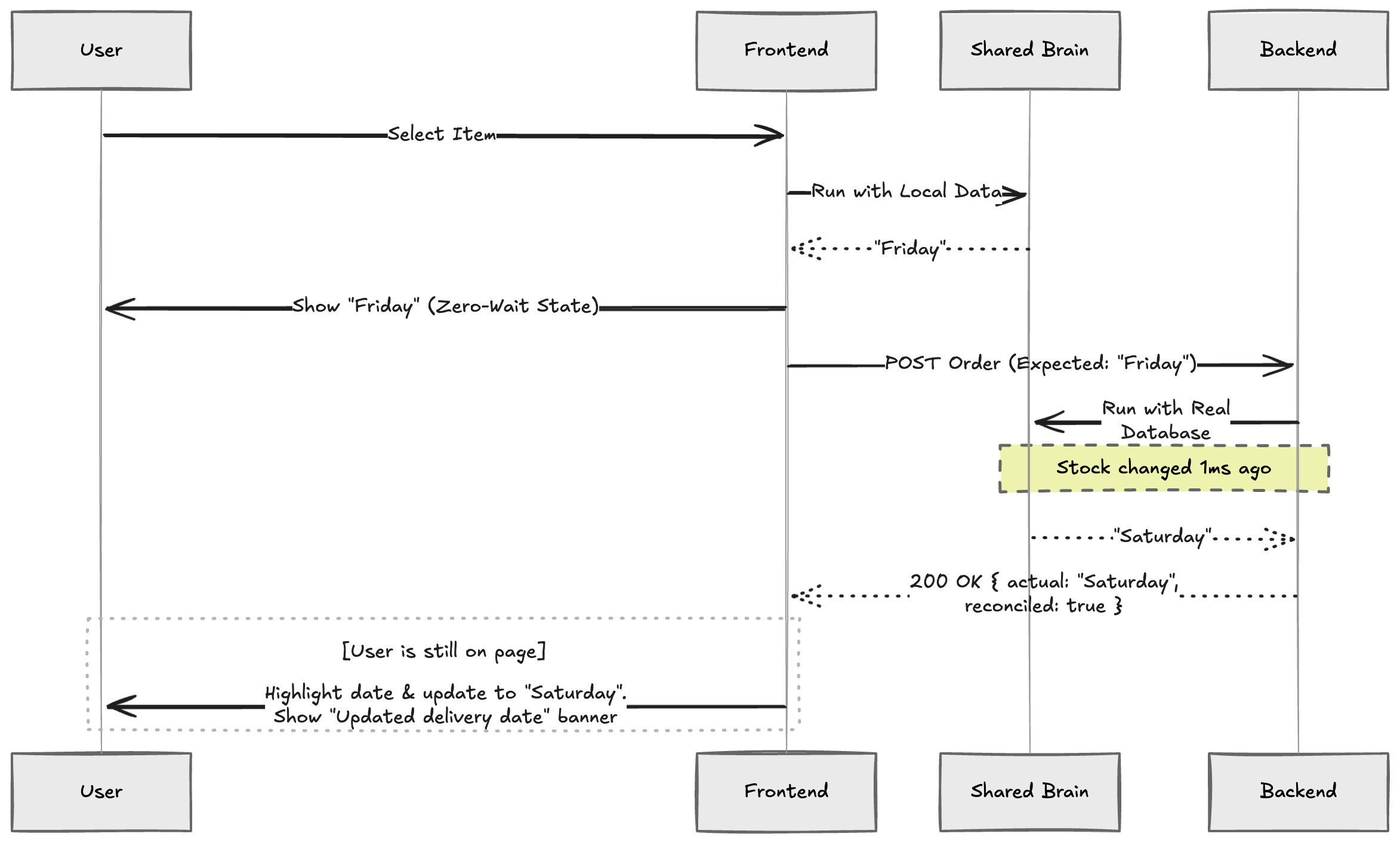

What happens if the mirror breaks? Maybe the last item in stock was sold in the 100ms between the user’s click and the API request.

The backend detects this discrepancy, known as logic drift, and sends the actual result back. The frontend must then negotiate this correction based on perceptual latency:

- Imperceptible Drift (<100ms): If the server verifies the data faster than the user can focus their eyes, we simply update the value. To the user, it feels like the initial load.

- Perceptible Drift (≥100ms): If the user has had time to read the optimistic result (e.g., “Friday”), we cannot silently swap the text. We must update the value and trigger a visual cue, such as a highlight flash or a toast notification, so the user notices the correction.

This distinction is what separates a high-fidelity interface from a dark pattern.

Silently swapping data after the user has read it is a form of UI gaslighting. It erodes trust. By visibly highlighting the drift, we avoid the “bait and switch” trap. We convert a potential error into a moment of system honesty, showing the user that while we prioritize their speed, we value the truth more.
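One way to encode the reconciliation rule is a small pure function that decides what the UI should do with the server's answer. This is a sketch under assumptions: `reconcile`, `Reconciliation`, and `PERCEPTION_THRESHOLD_MS` are hypothetical names, and treating a correction at exactly 100ms as perceptible is a choice made here, not something the rule above specifies.

```typescript
// Threshold from the text: corrections that land in under 100ms count as imperceptible.
const PERCEPTION_THRESHOLD_MS = 100;

type Reconciliation =
  | { kind: "confirmed" }
  | { kind: "silent-update"; value: string }
  | { kind: "highlighted-update"; value: string };

// expected:  what the frontend rendered optimistically
// actual:    what the backend computed
// elapsedMs: time since the optimistic value was shown
function reconcile(expected: string, actual: string, elapsedMs: number): Reconciliation {
  if (actual === expected) {
    return { kind: "confirmed" }; // the mirror held, nothing to do
  }
  return elapsedMs < PERCEPTION_THRESHOLD_MS
    ? { kind: "silent-update", value: actual } // swap before the user has read it
    : { kind: "highlighted-update", value: actual }; // swap and draw attention to it
}
```

The UI layer would then map `highlighted-update` to a flash or toast. Measuring `elapsedMs` from the moment of render (for example with `performance.now()`), rather than from the click, keeps the threshold tied to what the user actually saw.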

Stop soldering your rules to your infrastructure. Build a shared brain, define your interfaces, and start building for a zero-wait state.

About the Author

I’m Rachel Cantor, a product engineer with over 14 years of experience building production systems. I plan and implement technical architecture that requires a knack for detail and a focus on high-fidelity user experiences. Currently seeking contract opportunities.

Feel free to reach out to me on bear.ink or LinkedIn if you’re looking to build something sharp. 🙌